NASA Artemis Mission | Mineral Investigation

Mankind wants to fly to the Moon again. How wonderful! Unfortunately, I was far too young to witness the first Moon landing live, so I decided to take part in the #2 Moon Run myself. In the end, our team's project didn't make it to the Moon. 🥲 Still, it was a great effort!

In April 2020, NASA launched the "Honey, I Shrunk the NASA Payload" challenge to develop a payload for a small lunar rover. This rover will operate in an as-yet-undetermined lunar region as part of the Artemis mission, which is laying the groundwork for crewed lunar missions starting in 2024.

Fortunately, I knew someone from KSAT Stuttgart, a small satellite group at the University of Stuttgart. One thing led to another, and suddenly I found myself on the software team!

The Challenge

NASA's Artemis Program aims to establish a long-term human presence on the Moon.

Since resupply missions are costly, future astronauts must be able to locate, extract, and transform lunar resources into essential materials such as oxygen, water, construction materials, and fuel.

To support this mission, miniaturized payloads that can be deployed on small lunar rovers have to be developed. These payloads must help prospect, map or characterize lunar resources.

The primary difficulty lies in miniaturization, because current payloads are too big, heavy, and power-hungry for NASA’s new mini rovers.

Our Idea: Google Maps for Minerals on the Moon

With our payload called MICU (Mineral Investigation Camera using Ultra-Violet), we wanted to take lunar research to the next level.

MICU emits ultraviolet radiation at several wavelengths and detects the resulting fluorescence of minerals with a stereo vision camera setup.

With the help of an AI model, resources can be classified and mapped onto a lunar map, allowing future astronauts to explore and extract these resources.

How it works

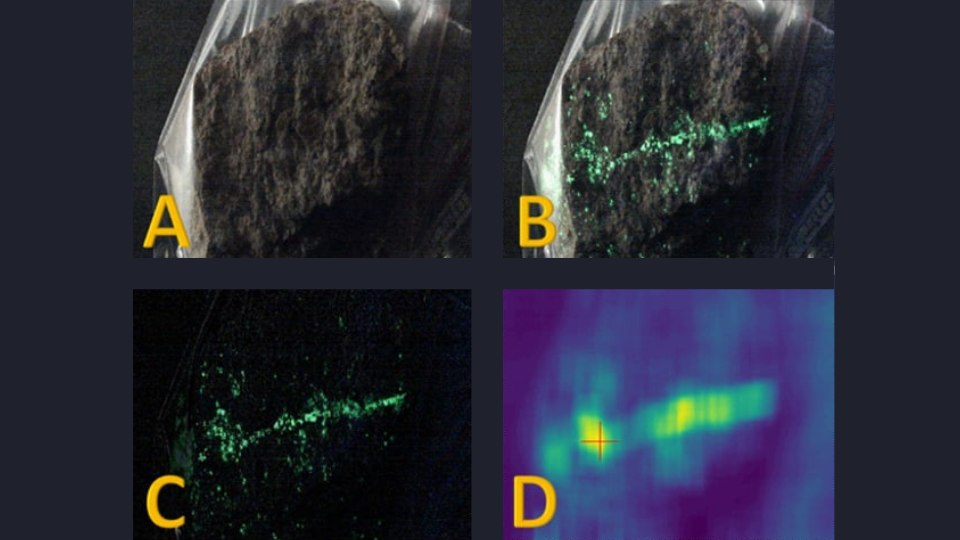

When minerals are exposed to ultraviolet light, some of them glow in various intensities and colors. The ultraviolet light excites electrons in the mineral, causing them to jump to a higher energy level. When the electrons return to their normal state, they release the absorbed energy as visible light. This phenomenon is known as fluorescence.

MICU uses this process to detect minerals on the Moon. It shines ultraviolet light onto the lunar surface and detects the fluorescence with a camera.

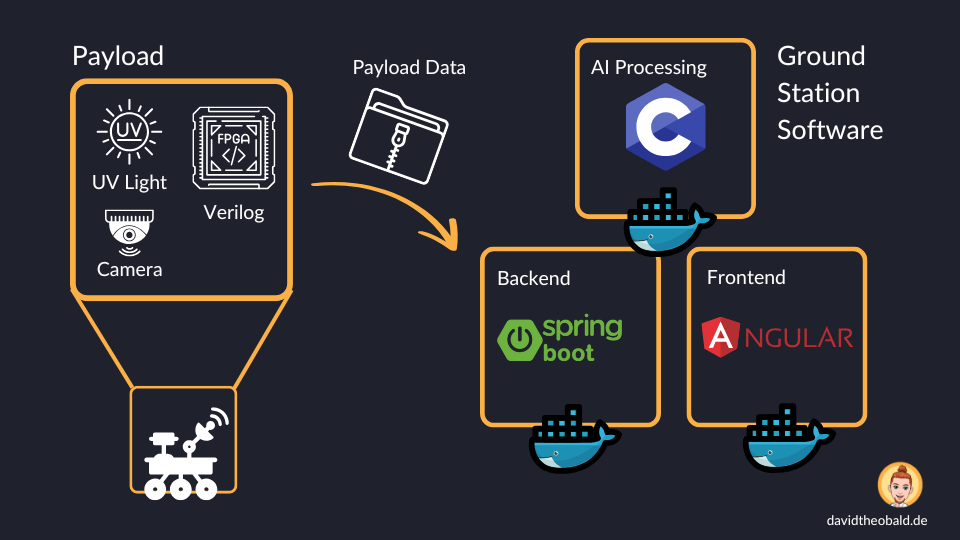

This approach uses image processing on a field-programmable gate array (FPGA) within the payload to detect fluorescence. The FPGA then sends the images and metadata to the ground station on Earth for further processing.
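
The detection algorithm itself is not spelled out here. One plausible approach, sketched in plain Python rather than FPGA logic, is to subtract a frame taken with the UV source off from a frame taken with it on and keep the pixels that brightened by more than a threshold. The function names, the threshold value, and the tiny grayscale frames are assumptions for illustration, not the actual MICU implementation.

```python
def fluorescence_mask(frame_uv_on, frame_uv_off, threshold=30):
    """Return a 0/1 mask marking pixels that brightened under UV light.

    Frames are equally sized lists of rows of 8-bit grayscale values.
    The threshold is a hypothetical tuning value, not a mission parameter.
    """
    if len(frame_uv_on) != len(frame_uv_off):
        raise ValueError("frames must have the same number of rows")
    mask = []
    for row_on, row_off in zip(frame_uv_on, frame_uv_off):
        if len(row_on) != len(row_off):
            raise ValueError("rows must have the same length")
        mask.append([1 if on - off > threshold else 0
                     for on, off in zip(row_on, row_off)])
    return mask


def fluorescent_fraction(mask):
    """Fraction of pixels flagged as fluorescent."""
    total = sum(len(row) for row in mask)
    return sum(map(sum, mask)) / total if total else 0.0


# Toy 2x3 frames: only the middle pixel of the first row glows under UV.
dark = [[10, 12, 11], [9, 10, 10]]
lit = [[12, 90, 13], [10, 11, 12]]
mask = fluorescence_mask(lit, dark)
```

Differencing against a dark frame is a simple way to suppress reflected ambient light, which is why it is used in this sketch; a real pipeline would also need to handle sensor noise and rover motion between the two exposures.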

The received data can then be labeled using images from a pre-created mineral database to train an AI, enabling it to automatically identify resources in the future.
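
To give a feel for how a database entry could assist labeling, here is a minimal sketch that suggests the closest reference entry for a detected glow by comparing mean colors. The reference colors, the label names, and the `suggest_label` helper are all hypothetical; the real database and model are not described in this post.

```python
import math

# Hypothetical reference entries: label -> mean fluorescence color (R, G, B).
REFERENCE_COLORS = {
    "mineral_a": (200, 40, 40),
    "mineral_b": (40, 60, 210),
    "mineral_c": (60, 200, 80),
}


def suggest_label(mean_rgb, references=REFERENCE_COLORS):
    """Return (label, distance) of the reference color closest to mean_rgb."""
    label = min(references,
                key=lambda name: math.dist(mean_rgb, references[name]))
    return label, math.dist(mean_rgb, references[label])


# A reddish glow lands closest to the reddish reference entry.
label, distance = suggest_label((190, 50, 45))
```

A suggestion like this would only pre-sort candidates for the human doing the labeling; the labeled images are what the AI model is eventually trained on.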

Hardware

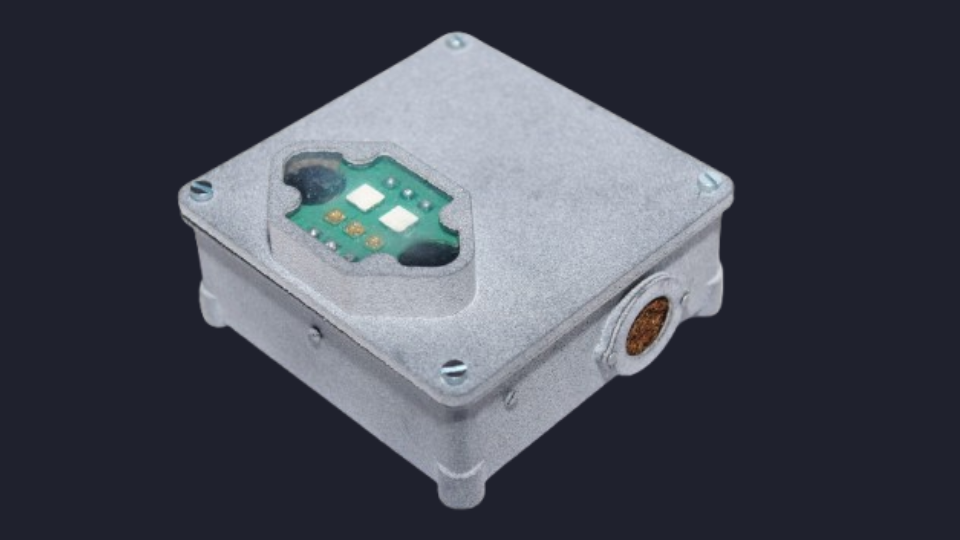

Designed for a moon mission, the payload had to meet stringent requirements. The primary goal was to achieve Technology Readiness Level 3 or higher.

The payload faced strict constraints on size, mass, and power. It was limited to a maximum size of 100 mm × 100 mm × 50 mm and a mass of 0.4 kg, and it had to operate reliably in extreme lunar temperatures ranging from -120 °C to +100 °C.
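
The limits above can be written down as a small design check. This is only an illustrative sketch: the `PayloadDesign` class, its field names, and the example numbers are assumptions, and the power budget is left out because no figure is given here.

```python
from dataclasses import dataclass

# Limits quoted in the text above.
MAX_SIZE_MM = (100.0, 100.0, 50.0)
MAX_MASS_KG = 0.4
TEMP_RANGE_C = (-120.0, 100.0)


@dataclass
class PayloadDesign:
    size_mm: tuple        # (length, width, height)
    mass_kg: float
    rated_temp_c: tuple   # (min, max) temperature the design tolerates


def check_constraints(design):
    """Return the names of violated constraints (empty list if all pass)."""
    problems = []
    # Compare sorted dimensions so the box may be oriented along any axis.
    if any(d > limit for d, limit in
           zip(sorted(design.size_mm), sorted(MAX_SIZE_MM))):
        problems.append("size")
    if design.mass_kg > MAX_MASS_KG:
        problems.append("mass")
    lowest, highest = design.rated_temp_c
    if lowest > TEMP_RANGE_C[0] or highest < TEMP_RANGE_C[1]:
        problems.append("temperature")
    return problems


ok = check_constraints(PayloadDesign((95, 48, 98), 0.38, (-130, 110)))
bad = check_constraints(PayloadDesign((100, 100, 60), 0.45, (-40, 85)))
```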

Software

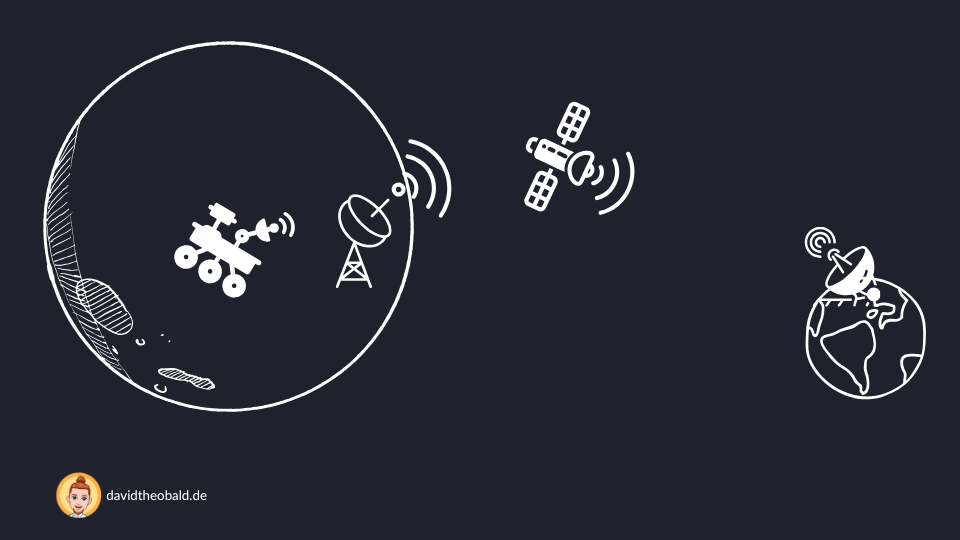

Our data has a long journey ahead of it before it reaches us. Our payload communicates with the mini rover's interface, which then transfers the data to the lunar station. From there, the data is sent to an orbiting satellite, which relays it toward Earth. The Earth ground station receives the data and processes it.

Unfortunately, we can only access the data after the experiment has been conducted. During the experiment, the rovers navigate autonomously across the Moon, and we have no way to intervene. This means our payload must not only handle all possible conditions but also operate entirely independently without remote control.

The NASA ground station provides the data as a ZIP file. The backend unpacks and parses this archive, processes the data, and exposes an API for the frontend, enabling NASA employees to classify the images to train the AI model.
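
As a rough idea of the reading step, the sketch below unpacks such an archive with Python's standard `zipfile` module. The archive layout (PNG images plus a single `metadata.json` at the root), the metadata keys, and the `read_downlink_archive` name are assumptions, since the actual format is not described here.

```python
import io
import json
import zipfile


def read_downlink_archive(zip_bytes):
    """Split a downlink ZIP into raw image bytes and parsed metadata.

    Assumed layout (hypothetical): image files ending in .png and a
    single metadata.json at the archive root.
    """
    images = {}
    metadata = {}
    with zipfile.ZipFile(io.BytesIO(zip_bytes)) as archive:
        # testzip() returns the first corrupt member name, or None.
        corrupt = archive.testzip()
        if corrupt is not None:
            raise ValueError(f"corrupt archive member: {corrupt}")
        for name in archive.namelist():
            if name.lower().endswith(".png"):
                images[name] = archive.read(name)
            elif name == "metadata.json":
                metadata = json.loads(archive.read(name))
    return images, metadata


# Build a tiny archive in memory to exercise the reader.
buffer = io.BytesIO()
with zipfile.ZipFile(buffer, "w") as archive:
    archive.writestr("img_0001.png", b"\x89PNG placeholder bytes")
    archive.writestr("metadata.json",
                     json.dumps({"img_0001.png": {"uv_nm": 365}}))

images, metadata = read_downlink_archive(buffer.getvalue())
```

Checking the archive before reading it matters more than usual in this setting, because the data cannot simply be requested again during the experiment.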

For the classification, we provide a frontend that allows comparing the lunar resource images with pictures from our pre-created mineral database.

Additionally, using a lunar map and the rover's metadata, a map of the identified resources can be created around the landing zone.
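
The mapping step could look roughly like this: classified detections are binned into grid cells around the landing site. The metadata format (rover offset east and north of the landing site in meters), the cell size, and the label names are assumptions made for this sketch.

```python
from collections import Counter, defaultdict


def build_resource_map(detections, cell_size_m=10.0):
    """Bin labeled detections into square grid cells around the landing site.

    Each detection is (x_m, y_m, label), where x_m and y_m are the rover's
    offset east and north of the landing site in meters (assumed format).
    Returns {(col, row): Counter({label: count})}.
    """
    grid = defaultdict(Counter)
    for x_m, y_m, label in detections:
        # Floor division keeps negative offsets in the correct cell.
        cell = (int(x_m // cell_size_m), int(y_m // cell_size_m))
        grid[cell][label] += 1
    return dict(grid)


detections = [
    (3.0, 4.0, "mineral_a"),
    (7.5, 1.0, "mineral_a"),
    (12.0, 4.0, "mineral_b"),
    (-2.0, 5.0, "mineral_b"),
]
resource_map = build_resource_map(detections)
```

Each cell can then be drawn on top of the lunar map, colored by its most frequent label.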

Failed Takeoff

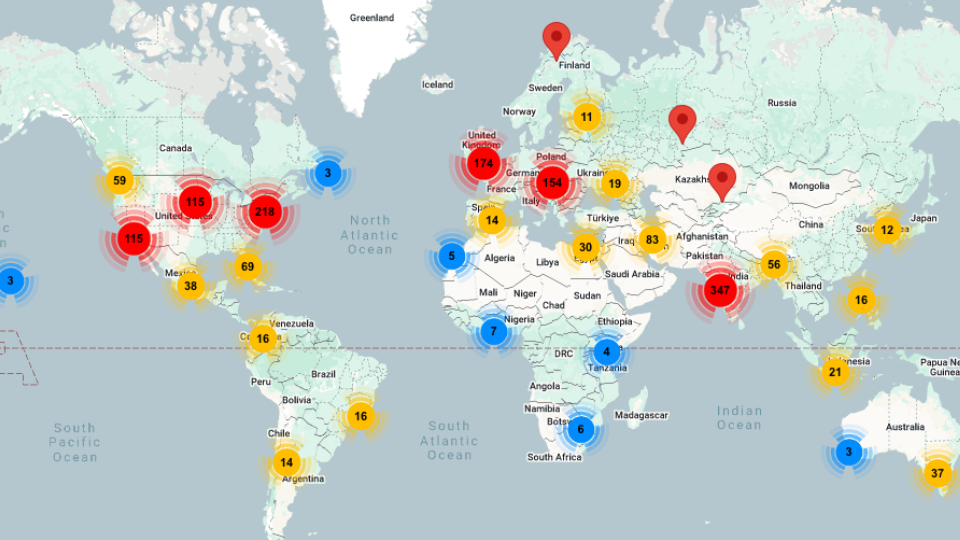

Although we secured 3rd place out of 168 teams worldwide in the first round of the challenge and won a substantial prize for developing our prototype, we were unable to convince the judges in the final round.

As a result, we won’t be going to the Moon, nor will we get to witness the rocket launch in person. 😞

Still, this project was an incredibly enriching experience for me. I had the opportunity to learn what it truly means to develop space-grade software and hardware.